Invisible AI: The Evolution of User Interfaces for AI

How structured actions and predictive systems are reshaping the prompt-based user interface

Updated on Saturday, May 31, 2025

The rise of Large Language Models (LLMs) like GPT-4 has fundamentally changed the way users interact with computers. At the forefront of this revolution was the prompt-based user interface: a simple text box where users entered their intent and received intelligent responses.

While this method proved powerful and versatile, over time, it also started to feel constrained and repetitive. Users found themselves caught in the loop of crafting better and better prompts, chasing clearer outputs in a linear exchange.

Yet, this prompt box remains a crucial bridge between human intention and AI capability. It democratized AI access, allowing anyone with a keyboard to tap into vast cognitive power. But as AI matures, so do the expectations of user experience. We’re now entering an era where the interface doesn’t just wait—it predicts, adapts, and integrates seamlessly into our workflows.

From Typing to Talking: The Rise of Voice Interfaces

Voice has emerged as a natural evolution beyond typing. Voice assistants like Google Assistant, Amazon Alexa, and even ChatGPT’s voice mode enable users to communicate with AI in a more fluid, human way. This removes the need for writing perfect prompts, making the experience more conversational and spontaneous.

For example, imagine asking your smart speaker: “What’s a quick, healthy dinner I can make in 20 minutes?”—and receiving a spoken recipe tailored to your pantry and diet. Voice interfaces bring context and personalization into play, and when backed by LLMs, they’re capable of handling complex queries with nuance.

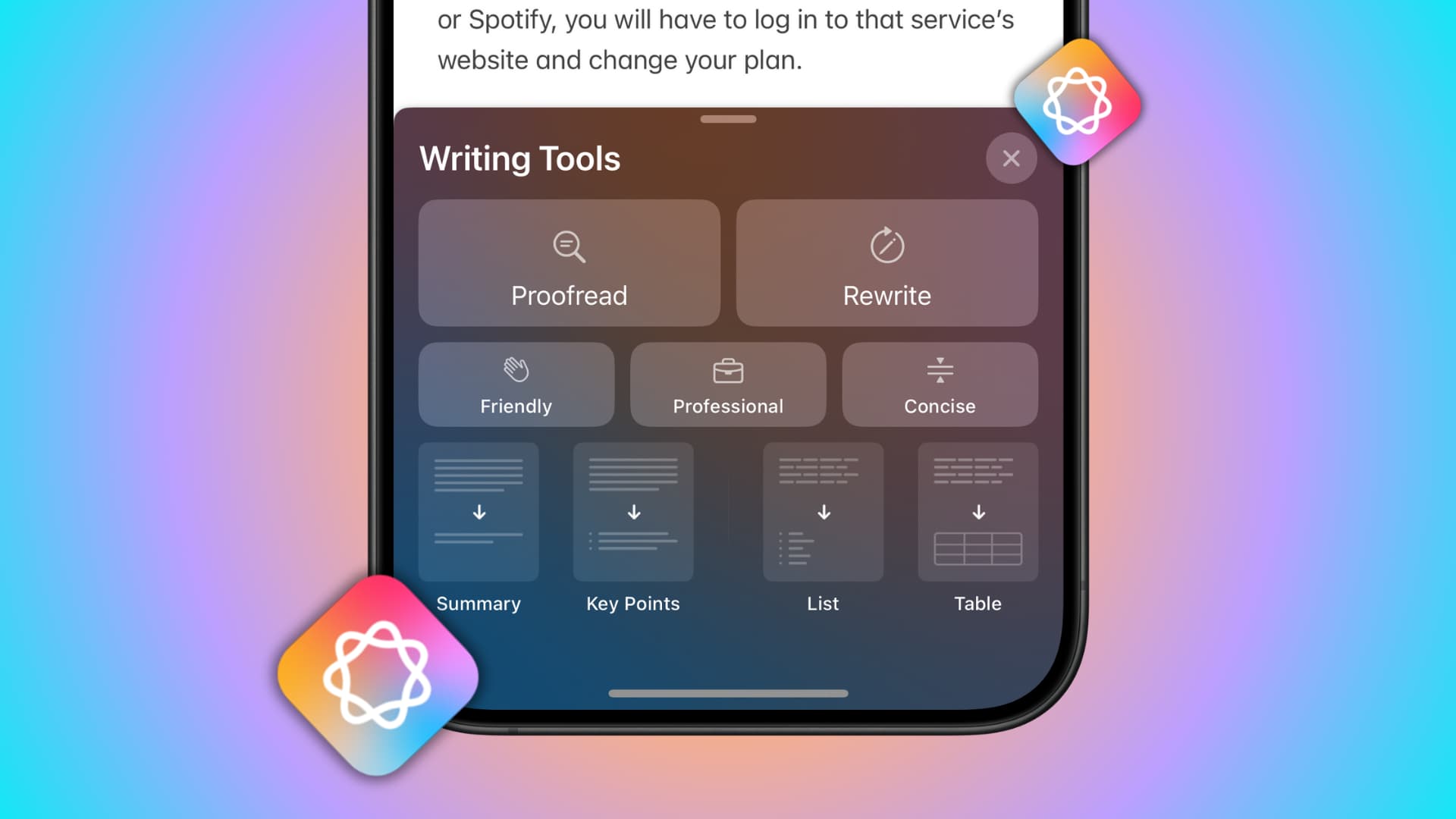

Structured AI Interfaces, Contextual Magic

One of the simplest forms of a structured AI user interface appears in Apple Intelligence Writing Tools. As AI becomes more integrated into productivity tools, structured elements are emerging: buttons labeled “rewrite,” “summarize,” or “make this shorter” are becoming ubiquitous. These interfaces hide the complexity of prompts behind intuitive actions. The user clicks, and AI acts.

Instead of typing out “Write a social media caption for this blog post,” a user simply highlights text and selects “Generate Caption.” The LLM interprets the content contextually and produces copy on the fly. Similarly, UI elements are now being enhanced or even rearranged with AI, offering smart suggestions, auto-filled forms, and dynamic titles based on usage patterns.
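To make the idea concrete, here is a minimal sketch in Python of how a button could stand in for a typed prompt. The action names, the template wording, and the `build_prompt` helper are all assumptions made for illustration; they do not describe how Apple Intelligence or any other product is actually built.

```python
# Hypothetical mapping from UI actions (buttons) to prompt templates.
# The labels and wording are illustrative, not taken from a real product.
ACTION_TEMPLATES = {
    "rewrite": "Rewrite the following text, keeping its meaning:\n\n{text}",
    "summarize": "Summarize the following text in two or three sentences:\n\n{text}",
    "make_shorter": "Shorten the following text without losing key points:\n\n{text}",
    "generate_caption": "Write a social media caption for this blog post:\n\n{text}",
}


def build_prompt(action: str, selected_text: str) -> str:
    """Turn a button click plus the highlighted text into a full prompt."""
    if action not in ACTION_TEMPLATES:
        raise ValueError(f"Unknown action: {action}")
    return ACTION_TEMPLATES[action].format(text=selected_text.strip())


# The user highlights text and clicks "Generate Caption"; the prompt
# is assembled behind the scenes and would then be sent to an LLM.
prompt = build_prompt("generate_caption", "Voice is replacing the text box.")
print(prompt)
```

The user never sees the template. From their side, the whole interaction is a selection and a click, which is what lets the prompt engineering stay hidden.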

These structured interfaces allow AI to blend into the design, making creativity faster and more accessible without overwhelming users with prompt engineering.

Invisible Interfaces: Embedded AI That Anticipates Needs

The next leap is perhaps the most transformative: interfaces that are invisible until needed. These systems monitor context—your location, recent activity, calendar events, or browsing patterns—to surface assistance without explicit commands.

For instance, an AI email client might suggest a meeting summary draft just as you’re about to reply to a thread. Or a personal finance app could notify you of irregular spending patterns and draft a recommendation on how to adjust. These systems use predictive models and behavioral patterns to preemptively serve user needs.
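As a rough illustration, the two scenarios above could be sketched with hand-written rules, as in the Python below. This is a deliberate simplification: a real system would more likely rely on learned predictive models, and every name here (`Context`, `suggest`, the field names, the 1.5x spending threshold) is a hypothetical choice made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Context:
    """Hypothetical snapshot of the signals an app can observe."""
    active_view: str                      # e.g. "email_reply", "budget_overview"
    thread_has_meeting: bool = False
    spending_this_week: float = 0.0
    typical_weekly_spending: float = 0.0


def suggest(ctx: Context) -> Optional[str]:
    """Return a suggestion if the context warrants one, else None."""
    # Rule 1: the user is replying to a thread that involved a meeting.
    if ctx.active_view == "email_reply" and ctx.thread_has_meeting:
        return "Draft a meeting summary for this thread?"
    # Rule 2: spending is well above the user's usual level
    # (the 1.5x threshold is an arbitrary illustrative value).
    if (
        ctx.typical_weekly_spending > 0
        and ctx.spending_this_week > 1.5 * ctx.typical_weekly_spending
    ):
        return "Spending is unusually high this week. Review a suggested adjustment?"
    # No rule matched: the interface stays invisible.
    return None
```

The important design choice is the `None` branch. When nothing in the context calls for help, the function returns nothing and the interface shows nothing.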

These interfaces remain out of sight until the moment is right, leveraging signals like location, activity patterns, and digital artifacts to anticipate intent. Instead of navigating menus or issuing commands, users are met with timely, contextual assistance that feels natural and non-intrusive.

A concrete example is LOATA’s Quick Insight feature. Rather than waiting for a user to search or manually extract information, Quick Insight continuously scans notes and documents in the background, identifying high-signal data points such as phone numbers, dates, locations, invoice totals, and prices. These extracted details are surfaced only when relevant, acting like a mental shortcut. Whether you’re reviewing a meeting note or prepping for a client call, Quick Insight unobtrusively presents what you need, when you need it, embodying the core idea of embedded AI that works invisibly but meaningfully in the background.
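How Quick Insight works internally is not described here, so the following Python sketch only illustrates the general idea of pulling high-signal details out of a note. It assumes simple regular expressions for three of the data types mentioned; the patterns, the `PATTERNS` table, and the `extract_insights` function are hypothetical, and a production system would need far more robust parsing, possibly with an LLM in the loop.

```python
import re
from typing import Dict, List

# Deliberately simple, illustrative patterns. Real-world formats vary widely.
PATTERNS = {
    # North American style numbers such as 555-123-4567 or +1 (555) 123-4567
    "phone": re.compile(r"(?:\+\d{1,3}[\s-])?\(?\d{3}\)?[\s.-]\d{3}[\s.-]\d{4}"),
    # ISO dates such as 2025-06-12
    "date": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
    # Currency amounts such as $1,250.00
    "price": re.compile(r"[$€£]\s?\d+(?:,\d{3})*(?:\.\d{2})?"),
}


def extract_insights(note: str) -> Dict[str, List[str]]:
    """Scan a note and collect every match for each pattern."""
    return {label: pattern.findall(note) for label, pattern in PATTERNS.items()}


note = "Call Dana at 555-123-4567 before 2025-06-12; invoice total is $1,250.00."
print(extract_insights(note))
# {'phone': ['555-123-4567'], 'date': ['2025-06-12'], 'price': ['$1,250.00']}
```

Running an extraction like this in the background and caching the results would let an app surface a phone number or invoice total the moment the user opens the related note, without the user having to search for it.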

The Shift from Command to Collaboration

What we’re witnessing is a shift in the human-computer relationship. It’s no longer about giving explicit commands—it’s about co-creating experiences. The interface is no longer the bottleneck but a creative partner.

In this new era, AI interfaces won’t ask, “What do you want me to do?” They’ll say, “Here’s what I think you need—shall we proceed?”

Just as graphical user interfaces (GUIs) emerged alongside command-line interfaces (CLIs) in the 1980s—transforming computing from a domain of typed commands into a more visual, accessible experience—we’re now witnessing a similar shift with the rise of invisible AI-powered interfaces. These new interfaces are emerging alongside prompt-based systems like chatbots and LLM tools, but they represent a deeper integration into our digital environments. While prompt interfaces require users to articulate their needs, invisible AI interfaces anticipate them, offering assistance seamlessly based on context. In the future, you may interact with AI without ever seeing a text box—just as modern users rarely engage with the terminal in macOS or Linux unless working on specialized tasks.